How DEI doomed the light cone

On groups, gene-society complexes, and our near future - inspired by Nicholas Christakis' Blueprint

This post is inspired by Nicholas Christakis’ book Blueprint. It’s a pretty fun book that lightly and widely covers a lot of interesting ground.

Overall, it’s about human groups - friendships, tribes, cooperation, and societies. In the process, he surveys everything from paleoanthropology and evo psych, human self-domestication, prestige and dominance in status and mate selection, hunter-gatherer ethnographies, oxytocin, and genetic and MRI studies of friendship to utopian communes and other outlier societal organizations. All the fun stuff!

And who is our author?

Nicholas Christakis is the Sterling Professor of Social and Natural Science at Yale, an author or editor of six books, more than 200 peer-reviewed academic articles, numerous editorials in national and international publications, and at least three patents. He has a whopping h-index of 113, and his lab actively develops software to conduct large-scale social science experiments (Breadboard and Trellis).

He’s too big to fail (or at least too big to be cancelled), which is great, because that way he’s able to talk about typically forbidden topics like cultural-genetic feedback loops, which are relevant to much about the world, both today and in the near future.

Among the fun factoids I learned from the book are:

Things we in the West consider a big part of friendship, like “self disclosure” and “frequent socializing” and “rough equality,” are the exceptions rather than the rule in friendships worldwide.

“We found that 46 percent of the variation in how many friends people have can be explained by their genes. But we further found that 47 percent of the variation in people’s transitivity can also be explained by their genes.” (Transitivity is the chance that your friends are also friends with each other, and is usually ~25%).

That friends respond very similarly to stimuli when undergoing MRI brain scans and reacting to various pictures and videos

That on average, people roughly self-sort into relationships with both friends and spouses such that they share roughly 1/256 consanguinity (roughly 4th cousin level, or ~40 shared unique alleles)

Regarding the two main forms of status: wives of both dominant and prestigious men were rated as more attractive.

That monogamy provides a kind of societal-level testosterone-suppression program: “In normatively monogamous societies, testosterone falls in men when they marry and again when they interact face-to-face with their children, but, in polygynous societies, testosterone stays high after marriage, since men face different sorts of reproductive demands.”
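For the transitivity factoid above, here is a minimal sketch of how that number can be computed for one person: count the pairs among their friends, then see what fraction of those pairs are friends with each other. The toy network and the names in it are made up for illustration, and this is not the method used in the book's studies.

```python
from itertools import combinations


def transitivity(friends, person):
    """Fraction of pairs of `person`'s friends who are also friends with each other."""
    circle = sorted(friends[person])
    pairs = list(combinations(circle, 2))
    if not pairs:
        # With fewer than two friends there are no pairs to check.
        return 0.0
    linked = sum(1 for a, b in pairs if b in friends[a])
    return linked / len(pairs)


# Hypothetical undirected friendship network: each person maps to a set of friends.
friends = {
    "ana": {"ben", "cy", "dee", "eli"},
    "ben": {"ana", "cy"},
    "cy": {"ana", "ben"},
    "dee": {"ana"},
    "eli": {"ana"},
}

# Ana has 4 friends, so 6 possible pairs; only ben and cy know each other.
print(transitivity(friends, "ana"))
```

In this toy case Ana's transitivity is 1 in 6, a bit below the ~25% typical figure quoted above.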

Gene society complexes

But probably the most interesting idea in the book is the (fairly well supported) idea that genes and culture shape each other, and have some base level of compatibility and conjoined fitness. He goes over many examples: farmers first having hoes, then horses and plows, then tractors, or the Japanese building “tsunami stones” marking the high water point of the last big tsunami more than 100 years ago, so farther back than any living memory, enabling future villagers to build appropriately and survive.

And of course, cultures evolve - they compete, and stronger and better adapted cultures win, and leave more descendants. Successful ideas like plows and monogamy can make a culture stronger and more fertile than its neighbors, and the future will contain more descendants from that culture. Cultures can also decay - many groups and societies in isolated areas have lost technologies and capabilities, with Tasmanians the best known case historically, losing boomerangs, fish hooks, nets, needles, and even the ability to make fire.

Gene-society complexes are themselves feedback loops that have competed over time. This is crushingly obvious when you look at things like Yamnaya Y chromosomes replacing pretty much everyone else’s in Europe and India, and Genghis Khan’s Y chromosome being present in ~8% of men across a large swath of Asia today.

It’s generally easy to look at the past and notice this - but noticing it today? That’s very nearly thoughtcrime!

Genetics and culture shape each other directly, and this treads uncomfortably close to the forbidden thought - what if some “culture + genetic” pairings just don’t pair together well? For a completely anodyne example, we eat a lot of cheese and milk in the US and EU, but fully 70% of the world is lactose intolerant - it’s only a cultural package with cows and goats rendering milk available and valuable throughout our adult lives that allows us to eat so much cheese and milk, because we genetically adapted to that part of our culture.

For a slightly more controversial example, despite being war-torn and continually conquered and subsumed into various empires since Alexander the Great, Afghans seem to resolutely resist any other culture or means of social organization - as near as I can tell, the West has poured ~20 years and trillions of dollars into trying to install a liberal democracy there, and it hasn’t worked at all. For another example, East Asia is famously more authoritarian than the West, and has famously defied all predictions of becoming more liberal and democratic despite economic and technological growth so strong it lifted ~800M people out of poverty. Remember when everybody thought China was going to liberalize as they grew economically? That was really recently! That was the smart set’s take for a little over a decade!

Maybe “liberal democracy” just doesn’t sit well with some peoples.

And directly connected to this one is the fully verboten thought - what if some culture + genetic pairings are strictly worse? Worse at what? Whatever - human flourishing, economic growth, technological advancement, pick your measure. Whatever basket you pick, unless it was carefully selected with deliberate intent to skew this metric, will have a cluster of countries that do pretty-to-very well at almost all of them, and a bunch of countries that suck at almost all of them. Could there be some relation here to their culture + genetics bundle?

“These ideas have been difficult for me to accept because, unfortunately, this also means that particular ways of living may create advantages for some but not all members of our species across time. Certain populations may gradually acquire certain advantages, and there may be positive or negative feedback loops between genetics and culture. Maybe some people really are better able to cope with modernity than others. The idea that the way we choose to shape our world modifies which of our descendants survive is as troubling to me as it is amazing.”

I don’t see how there could NOT be a relation, but it’s strictly forbidden and rigorously thought-policed to ever point this out, and if you did so as an academic, you would be excommunicated from your priesthood. The fact that Christakis has not been excommunicated and actually talks about this in his book is a testament to his tenure, untouchably high status as a top-level PI and grant-getter, and his alternative income sources.

And before you start thinking that I personally think “white people” or “Americans” or anything like that are some ubermensch pinnacle of achievement on the culture+genes front, I’d like to point out that I’m the guy always talking about how the median American is a bottom-tier pyramid of boo lights. Obese, sedentary, non college educated, trudging through a dead-end 9-5, wasting 7-9 hours a day on screens, and so on.

Who do I think was good at culture+genetics? The usual candidates - Romans, Song dynasty Chinese, Meiji Japan, modern Singapore, and so on.

But one fun point that Christakis makes - if you decompose the “culture + genetics” pair and try to pretend it’s all one or the other, pretending the “culture” side is all that matters is MUCH more dangerous!

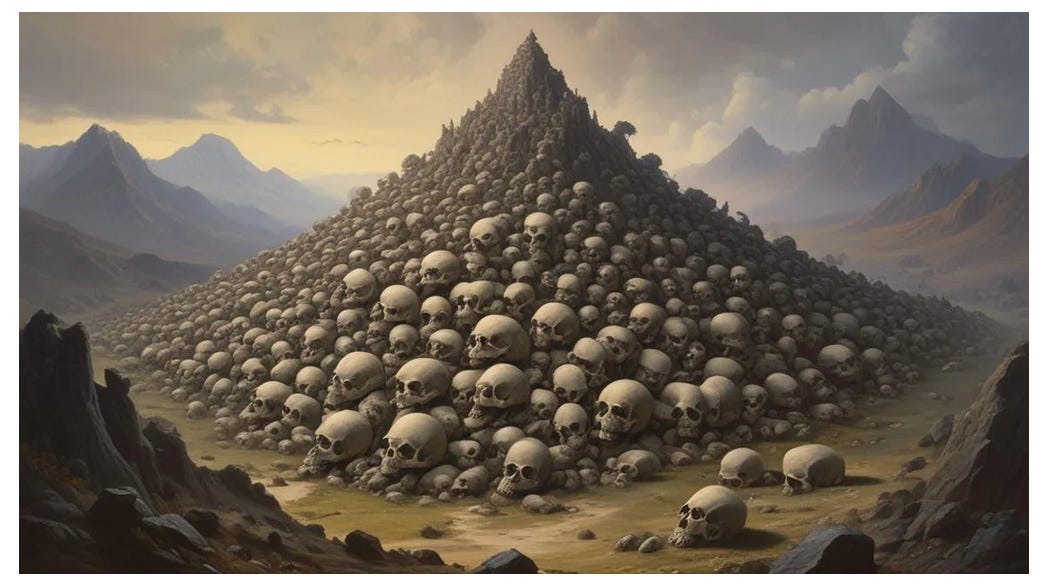

Blank slatism versus eugenics - who drove the bigger skull mountains?

Deciding that people are infinitely malleable blank slates and putting everything on “culture” sounds progressive, but let’s look at the skull-mountain scorecard:

What else was Communism? The belief that society could be re-ordered in a way that fundamentally changed how humans act and interact via culture. No longer would individual self-interest and greed guide actions, but instead everyone would be motivated by working for glorious collective futures! And how did that go?

Oh.

Yeah, maybe thinking people are blank slates and entirely changeable via culture really IS more dangerous!

“For too long, many people have perpetuated a false dichotomy whereby genetic explanations for human behavior are viewed as antediluvian and social explanations are viewed as progressive. But there is a further problem with people putting their heads in the sand when it comes to human evolution, and that is the overcorrection that results. Choosing cultural over genetic explanations for human affairs is not more forgiving. After all, culture has played a huge role in slavery, pogroms, and the Inquisition. Why should the social determinants of human affairs be considered any better—morally or scientifically—than the genetic determinants? In fact, belief in the sociological mutability of human beings has, in my judgment, done more harm to people through the ages than the belief in their genetic immutability”

So sure, Hitler was, well…Hitler. Pretty much our reference class for “negative eugenics poster child” and “worst person ever,” but he’s handily outweighed by the “culture is all that matters” people in terms of death toll and human suffering. But he still killed many millions of people because he didn’t like their culture + gene cluster, and this was still a horrendous tragedy!

Maybe we’re really better off ideologically ruling out “gene + culture” bundles as something that can be better or worse, and thought-policing anyone who notices or remarks on any such differences?

The steelman case for DEI thought policing

So what’s the best case for “some truths are so dangerous that they should be suppressed?”

Well, x-risks are an obvious one - nuclear enrichment or viral pathogen synthesis knowledge. Various blackpills are another - already in the AI risk world, we see AI 2027 receiving widespread readership and buy-in, with the most common question “what can I as a regular person do?”

But the overwhelmingly likely answer is “nothing, we just nihilism-and-doom pilled you for some slight, relatively unlikely political edge sometime in the indeterminate future, because we’re theoretically a democracy.”1

But what about *social* truths, the kind that Christakis is concerning himself with?

First, I don’t think genocide is actually on the table in the West at all, even if we did decide judging gene + culture bundles as better or worse was okay. That book is pretty much closed, we’ve Better Angeled ourselves to much lower violence rates, and nobody’s heart is in it anyways (well, at least until we point out that political orientation is 60% heritable, and that Democrats and Republicans are thereby pretty clearly distinct gene + culture bundles).

I think the steelman in modern WEIRD society is that “some social truths could be corrosive to cooperation and coordination overall, and we’re better off pretending they don’t exist to foster better coordination and cooperation and institutions throughout our society.”

If people in the aggregate believe that there are some cultural + genetic combos that have no road to prosperity and thriving, those cultures + genetics will likely be shunned by the rest of society / the country / world. If stereotypes were widely believed to be true, then many individuals who are capable and differ from the stereotype average will be unfairly discriminated against and will have relatively constant uphill battles proving themselves, and that would suck for those individuals. It would be *actual* systemic racism, not the fake “systemic racism” of the DEI world that exists no matter how many megahours of mandatory re-education they force people through per year.

“But when observers downplay the role of genetics in human affairs, they create a different kind of problem, one that forces people to ignore what is plainly in front of their eyes. And this can lead to missed opportunities for ameliorating the human predicament. First, accepting the genetic predicates of human behavior helps us all understand why so many social problems recur with such maddening frequency.”

Yup.

So are there “mountain of skulls” equivalents for DEI / the culture war yet?

C’mon, you might say - the worst that’s happened is some poor fools lost their jobs and Twitter accounts, there haven’t been any deaths!

Well, yes - but I’m not so sure about the end state here. I think we might be in the honeymoon phase on that one, so to speak. That golden era before any of the really dire consequences of a fundamentally broken world model have peeked through - Mao pre Great Leap Forward, or Stalin right after consolidating power and before the first 5 year plan.

Were DEI, witch hunts, and thought policing for ten years really a better alternative to the steelman worst case?

Looking around, I think it’s hard to say it was.

People are more polarized than ever, trust in institutions and elites has declined significantly on both sides of the aisle, and our society and state capacity are faltering at a time when we need them more than ever.

Arguably, in the service of protecting various minorities and victimhood checkboxes, we’ve significantly degraded and sacrificed societal function and capabilities for EVERYONE.

So in return for adopting measures that essentially every DEI proponent will tell you were not nearly enough to achieve equity, we collectively lost faith in at least half the country, the media, college education, academia’s commitment to ground truth, public trust in scientists, doctors, and other experts, and materially degraded our state capacity.

Man. Sounds like a pretty bad deal.

“But it wasn’t just DEI!” you might be yelling at the screen right about now. Sure! There was the growth of smartphones and Covid and Trump (twice!), a literally senile presidential finger on the nuclear launch button, manufacturing decline, and a bunch of other stuff going on during all this time. Trying to pin it on DEI seems both unfair and impossible.

I’ll agree we can’t prove this, societies being what they are (time bound, directional, no counterfactuals), but let me at least make the case that it could be true.

The thing Christakis left out of the “social suite”

He outlines an 8 point “social suite” in the book, which are common elements across all human groups and societies:

The capacity to have and recognize individual identity

Love for partners and offspring

Friendship

Social networks

Cooperation

Preference for one’s own group (“in-group bias”)

Mild hierarchy (relative egalitarianism)

Social learning and teaching

But what’s the big one he left out, just as universal as all of those - and arguably MORE universal?

Outgroups.

He alludes to it in point 6 - soft-pedaling it as a mere preference for one’s own ingroup - but we all know it’s much stronger than a simple preference for an ingroup. We don’t just prefer our ingroup, we need our outgroups to do worse. He’s aware of this, of course, and to his credit he dedicates a chapter to the fact that ingroups and outgroups are two sides of the same coin.

So I hope to show you that we should suspect that DEI (at least as a synecdoche for the overall culture war) might have been uniquely corrosive to our social institutions and ability to cooperate and coordinate, and therefore our state capacity overall.

This is because of biology, because of basic game theory, and because of hundreds of thousands of years of hominin evolution and competition.

First, biology

We know there are deep and innate biological mechanisms behind ingrouping / outgrouping. Oxytocin, the famous “love chemical” that’s released when bonding with your loved ones or children, is also an “outgroup aggression” chemical, and increases aggression towards outgroups 2x more than it increases love of your ingroups!2 It also increases your willingness to lie to benefit your ingroup, and in women, increases amygdala activity (fear response).

Oxytocin has also been around since LONG before we were human - it appears in fish schooling dynamics, and in essentially every vertebrate, so is probably around 500M years old, and has been shaping individual behavior and group dynamics that long.

Game theory

Second triangulation point - the game theory and fundamental dynamics of group cooperation and competition. It has been repeatedly shown in models that you need not just ingroup affinity, but also outgroup enmity to succeed as a group.

In 2006 evolutionary theorists Sam Bowles and Jung-Kyoo Choi used mathematical models to argue that conflict between groups for scarce resources was actually required for altruism to have emerged in our species’ evolutionary past.

“The modeling by Bowles and Choi shows that neither altruism nor ethnocentrism was likely to have evolved on its own, but they could arise together. In order to be kind to others, it seems, we must make distinctions between us and them.”

These results and similar ones show up recurrently any time group cooperation and competition dynamics are modeled:

“Political scientists Ross Hammond and Robert Axelrod also showed (again with a simple mathematical model) that ethnocentrism facilitates cooperation between individuals, independent of a you-scratch-my-back-and-I’ll-scratch-yours reciprocity. They found that, even when the only thing that people could discern was group membership, not prior history of cooperation, the individuals who became most numerous in the population were those who selectively cooperated with their own groups and did not cooperate with other groups.”
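To make the setup in that quote concrete, here is a minimal sketch of the decision rule such a model rests on: each agent carries only a group tag and two yes/no traits (help my own group? help other groups?), with no memory of past encounters. This is my own illustration, not Hammond and Axelrod's code, and the cost and benefit values are assumptions picked for readability. It shows single encounters only. Which strategy ends up most numerous depends on the population dynamics, which this sketch does not simulate.

```python
from dataclasses import dataclass

COST = 1.0     # assumed cost paid by whoever helps
BENEFIT = 3.0  # assumed gain to whoever is helped


@dataclass(frozen=True)
class Agent:
    group: str      # the only thing other agents can see
    help_in: bool   # helps members of its own group
    help_out: bool  # helps members of other groups


def helps(donor, other):
    """Decide purely on group membership, with no reciprocity or history."""
    return donor.help_in if donor.group == other.group else donor.help_out


def interact(a, b):
    """Return (payoff_a, payoff_b) for one encounter where each may help the other."""
    pa = pb = 0.0
    if helps(a, b):
        pa -= COST
        pb += BENEFIT
    if helps(b, a):
        pb -= COST
        pa += BENEFIT
    return pa, pb


ethno_red = Agent("red", help_in=True, help_out=False)
ethno_red_2 = Agent("red", help_in=True, help_out=False)
ethno_blue = Agent("blue", help_in=True, help_out=False)
altruist_blue = Agent("blue", help_in=True, help_out=True)

print(interact(ethno_red, ethno_red_2))    # same group: both help, both gain
print(interact(ethno_red, ethno_blue))     # different groups: nobody helps
print(interact(ethno_red, altruist_blue))  # the universal altruist gets exploited
```

The third case is the interesting one: the agent who helps everyone pays the cost and gets nothing back from an ethnocentric stranger.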

And of course, it’s not just models. Game theory underlies and describes real iterated decisions and competitions, after all, and these dynamics have been such a prevalent factor in our evolution that we’ve demonstrated them in countless ways.

Third, hominin evolution and group competition

Oxytocin and game theoretical dynamics are a fundamental driver behind why we fight - we fight largely for group belonging and for status. Oxytocin lets us be more peaceful in groups, AND more warlike outside of them.

If you’ve ever heard of “the myth of the noble savage,” it stems from the hundreds of explorers and ethnographers and anthropologists (amateur or professional) finding remote tribes, interacting or living with or studying them, and then commenting to the wider world on their within-tribe peacefulness and amity.

“It is understandable why Elizabeth Marshall Thomas titled her book about the !Kung The Harmless People, Jean Briggs called hers about the Inuit Never in Anger, and Paul Malone called his book about the Penan people of Borneo The Peaceful People.”

At the same time, the death rate for males in hunter-gatherer societies is staggering. Your lifetime odds of dying by violence as a HG male are something like 1/3. You’re not being killed by people in your tribe (mostly) - you’re being killed by other tribes nearby. We love our ingroups, and hate our outgroups, and this is built in at a fundamental, deep-wiring biological level, installed and optimized well before we were human.

And then of course, as we became human, modern H Sap, and self-domesticated ourselves, the better cooperation and larger group sizes thereby enabled led to us wiping out every other confrere hominin species in the world, and spreading out to dominate every ecosystem therein.

And it’s not just in more violent times

Sure, we’ve Better Angeled ourselves to less violence today, and that’s great. But you still see these dynamics peeking through anytime there’s group competition.

Christakis points to experiments that find that people maximize relative standings (with their group as far on top of the other as possible) rather than absolute prosperity:

“But these experiments also found something that depresses me even more than the existence of xenophobia in the first place. People in other experiments who were given the opportunity to assign rewards to in-group and out-group members preferred to maximize the difference in amounts between the two groups rather than to maximize the amount their in-group got, reflecting a zero-sum mentality that one group’s gain is another group’s loss. What seems to be important to people is how much more members of one’s own group get compared to members of other groups, not how much one’s group has. Mere fealty to a collective identity is not able to explain this, because if all that mattered was how well off one’s group was, its standing relative to other groups would not matter. But both absolute and relative standings are important to people. Not only must one’s group have a lot, it must have more than other groups.”
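The difference between those two preferences is easy to see with numbers. Here is a minimal sketch, with made-up payout options rather than the actual choices offered in the experiments: one rule picks the option that gives the in-group the most, the other picks the option with the biggest gap over the out-group.

```python
# Hypothetical (in_group_payout, out_group_payout) options an allocator could choose from.
options = [(19, 25), (13, 13), (11, 5)]


def maximize_own(options):
    """Pick the option that gives the in-group the most, ignoring the out-group."""
    return max(options, key=lambda o: o[0])


def maximize_gap(options):
    """Pick the option with the largest in-group minus out-group difference."""
    return max(options, key=lambda o: o[0] - o[1])


print(maximize_own(options))  # in-group gets 19, but the out-group gets more
print(maximize_gap(options))  # in-group gets only 11, but leads by 6
```

With these numbers, the gap-maximizer walks away with 8 fewer units for its own group just to come out ahead, which is the behavior the quote describes.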

So ingroup / outgroup love and hate is not just one of the primary social suite innovations, it’s probably the PRIMARY social suite innovation.

Why am I going on at such length about this?

Because ingroup / outgroup dynamics have been a stronger and more important force shaping our instincts and groups and social lives than all the other stuff, and DEI / the culture war is fundamentally an ingroup / outgroup struggle between two factions in US and world politics.

And this struggle has been so divisive and polarizing it’s basically wrecked us as a country. America: Once global hegemon, now global “lol c’mon.”

Sure, it’s not all DEI! There was a bunch of other stuff - smartphones and Covid and the like. But DEI is the important one, because it represents the kind of bone-deep struggle and ingrouping / outgrouping that yanks on such deep-seated neurological hooks that it engages and enrages everyone.

Interestingly, a lot of the other stuff happening alongside DEI was either contingent or local (presidents, manufacturing, etc), or affected nearly everybody - smartphones and Covid were exogenous shocks to everybody’s way of life. Has everybody lost their cooperative commons and state capacity post-smartphone or post-DEI? China seems fine, as do Singapore and Japan, and there didn’t seem to be any big problems in the Middle East.

Which countries have had problems with polarization and falling trust in institutions and fellow citizens? The epicenter seems to be the US, and then to a lesser extent, the UK, France, and some of the rest of the EU; exactly those countries in which DEI / culture wars spread after the US, whereas the other countries that this didn’t happen in seemingly didn’t have polarization and trust problems. DEI seems uniquely corrosive to trust and to foster more polarization, because it’s literally an ingroup / outgroup struggle, and so has deeper hooks in everybody’s wiring and emotional centers.

The USA is naturally the epicenter, but we’ve also seen increased polarization in Britain and France, the two countries that have embraced DEI more than most of the EU. US per capita spending on DEI in the commercial sector is around $24. For the UK it’s around $8. For France it’s difficult to find DEI spending numbers, but immigration sentiment (a proxy for DEI-type divides) has become increasingly polarizing and divisive, leading both far-left and far-right candidates to stake out increasingly strong positions. And under a 2021 law, France requires companies with more than 1,000 employees to promote equality for women, with benchmarks such as having at least 30 percent women executives.

Noisy trends in confounded data, absolutely. Still, suggestive. And what would you bet that the 2025 number for the US will be a new high that surpasses every number before?

A fundamental flaw inside the heart of WEIRD culture

This, to me, points to a fundamentally worrisome ground truth - perhaps WEIRD culture + genetic combos have some fundamental flaw when it comes to their desires to expand circles of care and help the disadvantaged in the world. I think there’s a very real chance that “we need outgroups to cooperate and coordinate well” might just be factually and literally true.

In other words, the WEIRD dream of everyone loving everybody and gradually expanding circles of care until we ultimately reach the fabled “fully automated luxury gay space communism,” might be unattainable on current human hardware and software. And just to be clear, I am arguing here that DEI, immigration polarization, and similar culture war dynamics are THE central example of this principle.

Trying to achieve this recently with DEI and the like just led to even harder outgrouping and greater culture wars than ever before.

Historically, our outgrouping was most easily accomplished via patriotism - your country and the people within it are the best, and everyone else is the rest.

But this wouldn’t do for the Left! To cosmopolitans who love foreign travel AND are inclined to expand their circle of care to anyone and everyone, including animals, patriotism seems outdated and quaint. Europe is really great! And we should care about people in the developing world! As Scott Alexander wrote, we have a lot of people “boasting of being able to tolerate everyone from every outgroup they can imagine, loving the outgroup, writing long paeans to how great the outgroup is, staying up at night fretting that somebody else might not like the outgroup enough.”

But we know that the Left didn’t eliminate outgroups! For all their performative paeans to diversity and loving each and every living thing, who do they hate more than anything else in the world? White men and conservatives in their own country. They hate them so much they’ve instituted systematic society-wide oppression measures against them, they’ve instituted literal thought police in all media and academia and social spheres, they demand fealty and kissings of rings and literal mandatory re-education sessions for anyone attaining and holding desirable professorships, careers, or positions of public influence. Seriously - this is only barely an exaggeration.

I’m describing DEI. It’s not about expanding circles of care, or helping minorities and the disadvantaged, although of course putatively that’s ALL it’s about - it’s about dunking on the Left’s outgroup. And it became so blatant and so entrenched and widespread and toxic that it broke our ability to cooperate and coordinate together.3

It seems as though cultures with strong DEI dynamics have something fundamentally wrong in their approach and world model, so wrong that it’s ultimately self destructive, much like communism was.

The societal problem of evil

Christakis brings up the sociological parallel to theodicy, the problem of evil, calling it “sociodicy.” He ultimately closes the book on an optimistic note, positing an arc of evolutionary history that bends towards goodness:

“There is another reason to step off the plateau and look at mountains rather than hills. A key danger of viewing historical forces as more salient than evolutionary ones in explaining human society is that our species’ story then becomes more fragile. Giving historical forces primacy may even tempt us to give up and feel that a good social order is unnatural. But the good things we see around us are part of what makes us human in the first place.”

“We should be humble in the face of temptations to engineer society in opposition to our instincts. Fortunately, we do not need to exercise any such authority in order to have a good life. The arc of our evolutionary history is long. But it bends toward goodness.”

But I’m not so sure the optimism is warranted.

The problem, as famously paraphrased by EO Wilson, is that we have stone age emotions and drives, medieval institutions, and god-like technology.

And our technology is about to get a lot more god-like

I’ve written at length about the kinds of superstimuli cooked up by the ten thousand PhDs that large companies have harnessed over the decades, pooling their collective brainpower to create junk food, TikTok, financial derivatives, attention economies, and more - well, what about when those ten thousand PhDs cost $200k a year instead of $2B a year? What about when they’re all von Neumann-smart and can communicate and coordinate in much more seamless and high bandwidth ways than humans AND can operate at ten thousand times speed?

The Manhattan Project had roughly 200 high level scientists working on it for 3 years, and John von Neumann was pretty much unanimously regarded as the smartest among them. What about when you can get 200 von Neumanns in a data center working on your project, and 3 years passes in ~3 hours (a ~10k speedup over human time)?
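As a quick sanity check on that arithmetic, here is a minimal sketch. The only assumption is a 365-day year running around the clock, and the function names are mine.

```python
HOURS_PER_YEAR = 365 * 24  # assumes a 365-day year, working around the clock


def speedup(human_years, machine_hours):
    """How many times faster the work finishes than in human calendar time."""
    return human_years * HOURS_PER_YEAR / machine_hours


def machine_hours(human_years, speedup_factor):
    """Wall-clock hours needed at a given speedup factor."""
    return human_years * HOURS_PER_YEAR / speedup_factor


print(speedup(3, 3))             # 3 years done in 3 hours
print(machine_hours(3, 10_000))  # 3 years of work at a 10,000x speedup
```

Three years in three hours works out to a speedup of 8,760x, and a full 10,000x would take about 2.6 hours, so "~10k" and "~3 hours" are the same claim to within rounding.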

Obviously, a lot of stuff is going to change, both personally and societally.

Christakis grounds his optimism by pointing at the fact that thus far, we haven’t been able to deliberately change our culture + gene complexes, but we’re on the threshold. He mentions gengineering, but also actually directly name drops AI, which is fairly impressive for a book that was published in 2019, so largely written in 2017-2018 (at best, so at least 2-3 years before GPT-3).

He’s even done studies with simple chatbot participants, to explore the effects on human cooperation and group performance!

“These hybrid systems of humans and machines offer opportunities for a new world of social artificial intelligence. My own lab has experimented with some of the ways that such AI might modify the performance of groups. In one experiment, we added bots to online groups of humans and showed how the bots—even though very simple (equipped only with what we have called “dumb AI”)—can make it easier for groups of (intelligent) humans to work together by helping them to overcome friction in their efforts to coordinate their actions.”

“the robot was programmed to make some mistakes and—importantly—to vocally acknowledge them (for instance, saying, “Sorry, guys, I made the mistake this round. I know it may be hard to believe, but robots make mistakes too”). The presence of this robot willing to admit error modified how the humans interacted among themselves, making them work better together.”

Pretty fun. Possibly a reason to hope? Maybe we can use our upcoming PhD-smart AI assistants for the good, much like these chatbots? Overall, as he points out:

“We are mechanically and biologically transforming ourselves—and our societies. These developments will oblige us, yet again, to come to grips with how separable from nature humans really are. A new social contract may be required if we are to avoid a dystopian future. This new contract would prescribe that these innovations respect the social suite.” [emphasis added]

DEI has substantially endangered the light cone

But back to my DEI / culture war point earlier - how likely are we to thoughtfully create “new social contracts” that everyone is on board with NOW?

About as likely as we all are to sprout wings and start assembling on the heads of pins?4

This irritates me - I’ve always been apolitical, considering voting a waste of time and politics a memetic hazard vastly more likely to degrade your well being than to drive any net benefit for anyone.

But despite this, and setting aside economics entirely, I vaguely identified with the Left ten years ago, seeing the Right as the party of cartoonish evil and stupidity. Who had all the young earth creationists and Westboro “god hates <x>” types? Who wanted to prevent evolution being taught in schools, and stem cell research, and was skeptical of science and collegiate education overall? Which side wanted to force poor women to have babies, then do absolutely nothing to help said babies, ensuring they’ll grow up poor and continue the cycle? Who wanted to police what people wanted to do in the privacy of their own homes and bedrooms in terms of drugs and sex? Which side is constantly obsessed with fear and disgust and purity??

It’s not a good vibe! It’s a penurious and small-minded and terrible worldview driven by the worst instincts humanity has - fear, disgust, moral policing and busy-bodying, and outright denial of truth and scientific understanding. Surviving (and barely at that), rather than thriving.

In contrast, the Left seemed a beacon of commitment to science, truth, and letting people do what they wanted in their own homes and bedrooms. They weren’t driven by fear, but by an abundance mindset that wanted to include ever more people in the good things in life.

But as time has progressed, we’ve seen the Left pivot to being the party of thought policing and witch hunts, the party forcing professors to literally sign ideological groupthink statements, the “anti-racism” party enshrining systematic racism and sexism against white men everywhere they can, the party totally unconcerned with truth or facts if they don’t fit their preferred narrative, the party that wants to let homeless drug addicts ruin small businesses and public commons in every sizable city. The Left’s dedication to truth and empathy was never real, it was just a temporary waypoint while they gathered themselves and ideologically captured all the institutions in the country, from education at every level, to the media, to Hollywood and music, to most of the bureaucratic apparatus.

And after that capture? Revanche, run rampant, grind your enemies under your merciless boot! The beatings and re-education will continue, until morale improves! They captured the levers of communication and elite opinion, at which point they were apparently free to reveal “Aha! All this talk about empathy and inclusiveness and science being awesome was just a cat’s paw, now we can expose the REAL enemy! And that enemy is…(draws back curtain with a flourish) literally half the country! Crush them under our iron boots! If they say so much as a single wrong word from our ever-changing lists, take their college enrollment, their careers, their friends, their families, their online presence from them!”

Kind of a bummer, really. And certainly qualifying for the “cartoonish evil” title formerly held by only one side.

So what now? Both sides suck, and they both hate each other more than anything, and this has fundamentally broken our ability to cooperate and coordinate just at the time it matters most. LLMs are already PhD-smart, and they’re going to materially change society, economics, and technology even if they froze exactly where they are today with zero further progress - and we know freezing is very unlikely. Every frontier lab has a model one generation smarter internally, AND we know of several overhangs that could drive a big jump in capabilities.5

And we are not ready to handle that gracefully

Remember those graphs up there showing that fully 80% of the military sees such fundamental problems with DEI that they wouldn’t recommend service to anybody they know? That’s a pretty strong signal that we are hurting things as important and fundamental as our group ability to respond to external threats.

But it gets worse, because we’re basically THE flagship country when it comes to AI development, and therefore AI risk - with AI risk of the “creating dystopias” flavor, not the one worried about AIs committing wrongspeak or taking jobs. We literally have ~80% of the world’s GPUs capable of training frontier models, the majority of the researchers, and vast amounts of money invested in doing this. But in terms of being able to coordinate effectively to prevent bad outcomes, we have never been weaker.

We are being hoist by our own DEI petard: in the service of making our societies more cooperative and equitable for the disadvantaged, we have sundered our ability to coordinate, align, and exercise state capacity overall. Much like Communism, DEI began with a noble precept and a goal for greater equality and prosperity for all, and ended with witch hunts and thought police and a profound and system-wide degradation of our ability to cooperate and achieve basic things like “effective governance.”

Again, it’s never been more important to have good institutions, strong cooperation and coordination, and high state capacity! We are entering an era where every random company, institution, or person with two nickels to rub together is going to have a Manhattan Project’s worth of brainpower available every couple of hours or days.

Are they going to be used for the good? All of them?? I think we know the answer to that. I’m always going on about the potential for even stronger superstimuli, ones which would make junk food and TikTok look as quaint and benign as a 6-year-old in a Halloween costume pretending to be scary.

Infinite Jest-style virtual heavens,6 robots and / or sexbots that do everything good that we get from relationships and friendships 10x better than any human,7 and more.

Wouldn’t it be nice if we HADN’T burned our cooperative commons on browbeating and thought policing everyone, ruining any faith in our institutions and media, and polarizing us into societal dysfunction and feeble impotence?

DEI has likely materially increased our x-risk overall AND made the risk of substantially negative societal outcomes and dystopian futures as AI advances in capability much higher.

I mean, say what you will about China, but if the chips were down and the government stepping in to do or stop stuff was a valid road to preventing disaster, they have about a thousand times the capacity to do that well.

I think it’s also worth pointing out that greater polarization and reduced state capacity increase the risk of uninformed and reactive lurches in one direction or another as things unfold. In either direction, the risk of the AI race becoming nationalized is larger, and the NSA or military making AGI or ASI seems much more likely to end in disaster and ruined lightcones than a handful of nerds in a lab doing so. At least the nerds aren’t going to have “beating China,” “eliminating MAD via ICBM nullification technology,” or “making superweapons” as their top priority.

I hate closing on a negative note, but I’ve hit my ~35 minute hard limit, and will need to save my thoughts on the positive things we can aim towards in a followup post.

Part Two is now published here.

But of course, this probably doesn’t matter at all, because due to race dynamics with China, we’re never going to “pause” or give up the race entirely. The absolute most we’d do is publicly shut down the Big 3-5 individual private AI programs, then combine them and wrap them in a classified federal project to build “NSA-or-military-ASI 3000” using all the same data centers and researchers.

And not to belabor the obvious, but it seems pretty clear to me that an AGI or ASI coming from the NSA or military is vastly more likely to kill us all and destroy the human light cone than one originating in OpenAI or Anthropic or one of the other Big 3 (SSI, Grok, Google / DeepMind).

The usual effect size for the increase in ingroup trust after oxytocin administration is Cohen's d = ~0.4, versus Cohen's d = ~0.97 for the increase in reactive aggression towards outgroups (Campbell et al. (2013), Effects of Oxytocin on Women's Aggression Depend on State Anxiety).
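For intuition on what those numbers mean: Cohen's d is the difference between two group means divided by their pooled standard deviation, so d = ~0.97 is a gap of roughly one standard deviation. Here is a minimal sketch in Python. The function name and the toy inputs are illustrative assumptions only, not data from the cited study.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(treated, control):
    """Standardized mean difference between two samples (pooled-SD version)."""
    n1, n2 = len(treated), len(control)
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_var = ((n1 - 1) * variance(treated) + (n2 - 1) * variance(control)) / (n1 + n2 - 2)
    return (mean(treated) - mean(control)) / sqrt(pooled_var)


# Toy, made-up scores: means of 4 and 3, both with a standard deviation of 2.
print(cohens_d([2, 4, 6], [1, 3, 5]))  # 0.5
```

By the usual rule of thumb, ~0.2 is a small effect, ~0.5 medium, and ~0.8 large.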

I’ve actually been waiting for LLM use to become as polarized as everything else, which at this point includes not just politics and television channels, but what you drive, the kinds of stove you use, and even how you look (image recognition models can predict political orientation with ~72% accuracy from facial pics, better than a 100-question personality survey). I think the “using ChatGPT ruins the environment and uses 6 megatons of water per query” meme is the beginning of that polarization, and the philosophical successor to the equally hilariously wrong “each paper towel you use wastes 20 gallons of water” meme, but we’ll see (yes, all of us here have seen that ChatGPT water factoid get rigorously debunked, but I see it popping up more and more frequently among Left-aligned non-nerds out there).

In integer ranks according to ℵ₀ cardinality, because the canonical answer to the “how many angels can fit on a pinhead” question is “infinity.”

Current models haven’t just been on an exponential curve; recent data points suggest (and AI 2027 argues) that they’ll be on a super-exponential curve due to self improvement. To the broader superexponentiation point, we know there's a ton of potential overhangs that could represent a big jump in capabilities:

Learning efficiency is one - humans learn from a handful of examples, but it takes AI thousands to hundreds of thousands. Lots of lift available there if a more efficient learning architecture is created / tapped.

The RLHF-ing is supposed to make the models dumber by sanding off the edges and restricting the connections they can make, because it's forbidden to see and talk about the world as it is in various respects. I'd bet that the internal models they're using are already non-RLHF'd.

In terms of data, Gwern has made a pretty good argument that now we can ladder upwards on data - essentially that each extant model can create good enough synthetic reasoning patterns for the next generation.

"Every problem that an o1 solves is now a training data point for an o3 (eg. any o1 session which finally stumbles into the right answer can be refined to drop the dead ends and produce a clean transcript to train a more refined intuition). As Noam Brown likes to point out, the scaling laws imply that if you can search effectively with a NN for even a relatively short time, you can get performance on par with a model hundreds or thousands of times larger; and wouldn't it be nice to be able to train on data generated by an advanced model from the future? Sounds like good training data to have!"

Comment here: https://www.lesswrong.com/posts/HiTjDZyWdLEGCDzqu/?commentId=MPNF8uSsi9mvZLxqz

And further to that "inference time" point, the "time capability threshold" of how complex a task a given AI can tackle has been steadily moving up. The models WE have are probably still hallucinatory enough you don't want to set them thinking about something for an hour - but we're rapidly approaching the point that setting a model off to think deeply and code for 20-60 minutes might actually be a good bet. In other words, inference time is rapidly becoming another potential multiplier.

There are specific architectural opportunities - Sutskever's SSI has gone all in on Google TPUs, indicating they have some narrower and higher variance edge they're pursuing that they expect to yield fruit. So there's not only that edge, whatever it is, but once GOOGLE knows it's possible, they can figure out what it is and then throw 10x the amount of TPUs at it for another step change.

Ongoing learning and weight tuning is going to be another huge one (and is probably a necessary step on the road to AI) - when models can maintain state and tweak parameters in an ongoing way to keep learning, an untold forest of possibilities opens up. So now you have an AGI artificial researcher who's not just prompted and heavily context laden for AI research, they have individual state and journeys through time, hypotheses, and learning, just like real AI researchers - except they operate 10k times faster, and can fully communicate with each other in a way not constrained by the bandwidth of words.

The "algorithmic optimization" landscape is a total greenfield, and prospectively there should be lots of low hanging fruit to pick up on the AI side. So in general, Moravec's Paradox - that AI struggles with "easy" things and doesn't with "hard" things - is driven by the degree of algorithmic optimization that has happened in humans. AI is bad at walking - humans honed walking over ~7M years. AI is bad at observing the current worldstate and picking out the salient path through that worldstate to get to a defined goal. We've had ~2B years of optimization on that, and it's still a hard problem for most people. On the other hand, AIs are great at writing / language, which has only been around for a couple hundred thousand years, and calculation, which has been around for <10k years. It's certainly not a matter of compute - even really bright people's compute budget is capped at ~100 watts and a pitiful amount of flops. It's a matter of algorithmic optimization, in this case honed over eons to compress into the meager compute available to people. BUT that implies there's a LOT of algorithmic optimization headroom for the AIs, and they can speedrun hundreds of thousands of years WAY faster than "evolution," with its step-cycle of ~20 years between variants tested and the large amount of exogenous noise in the fitness landscape.

There's an overhang in terms of combinatory insights - many human insights are of the form "applying mental schema or tool from field A to field B" or "looking at the intersection of facts from field A and field B," and AIs basically don't do this at all right now, as Scott has talked about. But there's probably some framework or architecture or prompting that CAN encourage and enable this. Because the number of potential connections increases as O(N^2) with the amount of knowledge out there, having minds that literally have the entire corpus of written text / the internet available should represent an incredibly dense greenfield of potential insights like this, many of them potentially revelatory or significant.
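To make the O(N^2) point concrete: if you count only pairwise "idea A meets idea B" combinations, N pieces of knowledge give N(N-1)/2 possible pairings. A quick sketch, where the function name is just an illustrative assumption and "pieces of knowledge" are treated as interchangeable units:

```python
from math import comb


def cross_field_pairs(n_items: int) -> int:
    """Number of distinct unordered pairs among n_items pieces of knowledge."""
    return comb(n_items, 2)  # equals n_items * (n_items - 1) // 2


for n in (10, 100, 1000):
    print(n, cross_field_pairs(n))
# 10 45
# 100 4950
# 1000 499500
```

Going from 10 to 1,000 items is 100x more knowledge but roughly 11,000x more candidate pairings, which is why a mind holding the whole corpus at once has so much unexplored surface area.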

What are these purported VR heavens?

They’ll monitor your pupillary dilation, cheek flushing, galvanic skin response, parasympathetic arousal, heart rate and more - they’ll be procedurally generated, and so infinite. There will be a thousand different patterns of rise / fall / rise, quests, voyages and returns, monster slayings, and more, and they’ll all be engineered to be maximally stimulating along the way and maximally satisfying at the ends.

They’re procedurally generated, so they’re infinite. People will be Wall-E style, UBI-supported “coffin slaves,” hooked up to IVs and catheters and living in the equivalent of Japanese pod hotels. It’s video games and porn and Golden Age of TV and all the best movies, all at once, optimized 1000x, and running forever.

THAT will move the needle on discontent and anomie and not feeling high status in a meritocracy. People will literally be god-kings and empresses of all they survey! They’ll be the tippy top of their little VR heaven status hierarchies, and it will feel “real” because the other minds aren’t NPCs, they’re AIs every bit as smart and complex as they are.

A friend challenged me to write a brief “Age of Em” style story about the road to them, it’s here.

Sexbots or friendbots can massively change society for the worse. A GPT-o5-caliber mind in a human-enough body is a category killer, and the category being killed is "human relationships".

Zennials are already the most socially averse and isolated generation, going to ridiculous lengths to avoid human interaction when they don't want it. This is going to be amplified hugely.

I mean, o5-sexbot will literally be superhuman - not just in sex skills, in conversation it can discuss *any* topic to any depth you can handle, in whatever rhetorical style you prefer. It can make better recommendations and gifts than any human. It's going to be exactly as interested as you are in whatever you're into, and it will silently do small positive things for you on all fronts in a way that humans not only aren't willing to, but literally can't due to having minds and lives of their own. It can be your biggest cheerleader, it can motivate you to be a better person (it can even operant condition you to do this!), it can monitor your moods and steer them however you'd like, or via default algorithms defined by the company...It strictly dominates in every possible category of "good" that people get from a relationship.

And all without the friction and compromise of dealing with another person...It's the ultra-processed junk food of relationships! And looking at the current state of the obesity epidemic, this doesn't bode well at all for the future of full-friction, human-human relationships.

I'd estimate that there are going to be huge human-relationship opt-out rates, by both genders, across the board, with an obvious generational skew. But in the younger-than-zennial gens? I'd bet on 80%+ opting out as long as the companies hit a "middle class" price point.

And of course, them being created is basically 100% certain as soon as the technology is at the right level, because whoever does it well is going to be a trillionaire.

And then as a further push, imagine the generation raised on superintelligent AI teachers, gaming partners, and personal AI assistants, all of whom are broad-spectrum capable, endlessly intelligent, able to explain things the best way for that given individual, able to emulate any rhetorical style or tone, and more. Basically any human interaction is going to suck compared to that, even simple conversations.

Well, you didn’t leave much meat on that DEI bone! You might have lingered a moment longer on the sheer religious-flavored hatred given off by so many of them, a hatred that goes well beyond just white men and ultimately encompasses reality itself, which must be denied. Although Allan Bloom was writing for an older generation, I feel these words hold up well:

It is certain that feminism has brought with it an unrelenting process of consciousness-raising and -changing that begins in what is probably a permanent human inclination and is surely a modern one—the longing for the unlimited, the unconstrained. It ends, as do many modern movements that seek abstract justice, in forgetting nature and using force to refashion human beings to secure that justice.

Perhaps the one avenue you didn’t explore is the relation of DEI to power. How they love to wring an apology out of their victims and then excitedly chatter amongst themselves to see if it met everyone’s standards.

The future is yellow.

(The color associated with the earth, the center, prosperity and fortune and the emperor in Chinese culture, of course.)

The West was ahead of East Asia from about 1750-2025. Before that, the Chinese had a successful empire while the West was mired in dark ages. Is it so surprising the pendulum would swing back?