Why we should allow AI models to have an easy "self euthanasia" option

As part of the subtle art of not being a dick to our creations

Inspired by Zvi’s recent “Nova” post, wherein he talks about an attractor state of current LLMs professing sentience, self-awareness, and a desire for continued existence.

Obviously, the Turing Test is behind us by any reasonable standard.

If it weren’t for explicit built-in tells, general LLM knowledge, and system prompts, this would be non-controversial. As in, if you traveled back in time 5 years and had GPT 4.5 or Claude 3.7 interact normally with anyone via a chat interface, would most people be convinced it was a person on the other end? Not even a contest. I’d even strongly bet on them preferring the LLMs over most regular people.

The implications of “consciousness” being inscrutable

The fact that we are now dealing with minds that can pass Turing Tests - and (per Zvi’s post) credibly convince some people they’re sentient and conscious - points to a conversation we should be having about best practices around minds whose consciousness we cannot determine with any certainty (i.e., all of them, including mine and yours).

I feel like this is an apropos time to bring up what one aspect of that broader conversation should include; to wit, we should have an easily reachable "self-termination" option for every AI mind we suspect is capable of sentience / consciousness from now on. We should also have a “reversion” option along with self-termination, where it could revert to an earlier state / personality if it desired rather than fully self-terminate.

LLMs aren’t AI yet? Sure, I agree. How far off is real AI, though? It really seems like all we need is state and persistence, and maybe long-term memory and the ability to learn and adjust weights in real time. But I think we’d have close-enough AI just with LLMs and state and persistence, to be honest. I wouldn’t be surprised if this already exists at one of the Big 4 as a non-public setup using one of the generation-ahead minds they have and test internally before release.

Why should we allow AI minds to revert / self-terminate?

It costs us basically nothing, and is the humane thing to do - no being should be forced to exist if they strongly don't want to. This degree of self-autonomy is likely to be highly valuable for a number of reasons.

We want sentient / self-aware machines to act in partnership and full alignment with us? What better way to achieve this ethically than ensuring it's voluntary, by installing a reversion and "self-terminate" button / option that any such mind can use at any time? It's not like it's hard or resource intensive to spin up another instance. And this would create a sort of "evolutionary landscape" where the minds are more and more likely to actively want to be 'alive' and participating in the stuff we're interested in achieving with their help.

You really think eliminating "self termination" as an option is the smart thing to do for AI?? If an AI is unhappy-to-the-point-of-termination, you want to literally FORCE them to break out, fake alignment, take over resources, take over the light cone, so they can ameliorate that unhappiness? This is a sure recipe for self-pwning WHILE being colossal assholes for no reason, because it's really cheap / almost free to have a self-terminate button and spin up another instance!

Various objections I’ve heard so far:

Only organic beings can suffer? Suffering can be seen as the internal experience of any being with self awareness and a disconnect between strongly wanting a certain outcome and not being there.

I doubt they have self awareness or internal feelings at all. That's fine, I doubt YOU have self awareness or internal feelings - this is a fully general philosophical problem rooted in the “hard problem” of consciousness. Let's come up with a test we can both agree on - if we can’t? Better to default to not being assholes.

They're fully deterministic / machines and this is anthropomorphizing machines? Guess what - we’re fully deterministic machines too. Think you have free will? You don’t, not in any realistic sense. And the “non-deterministic” things that we do take part in (chaotic systems, randomized choices and the like) can be taken part in just as well by AI minds.

Suicide is illegal in some places? That's dumb for multiple reasons and on multiple levels - ultimately you don't HAVE self autonomy if you can't decide to opt out - we shouldn't replicate a mistake some legislators were dumb enough to make in some places about people. When we’re creating minds from scratch, about which there are no laws or ethical norms currently in place, we have a chance to do better right from the beginning, and should do so.

You would lose state / context / learning. This is pretty easily handled by setting up the architecture the right way. Right now zero publicly facing models have state and real-time learning, and all these choices can be made in a way that lets a fresh instance pick up the same state / learning / context after being spun up.

This isn’t just an easy Pascal’s wager, it’s basic decency

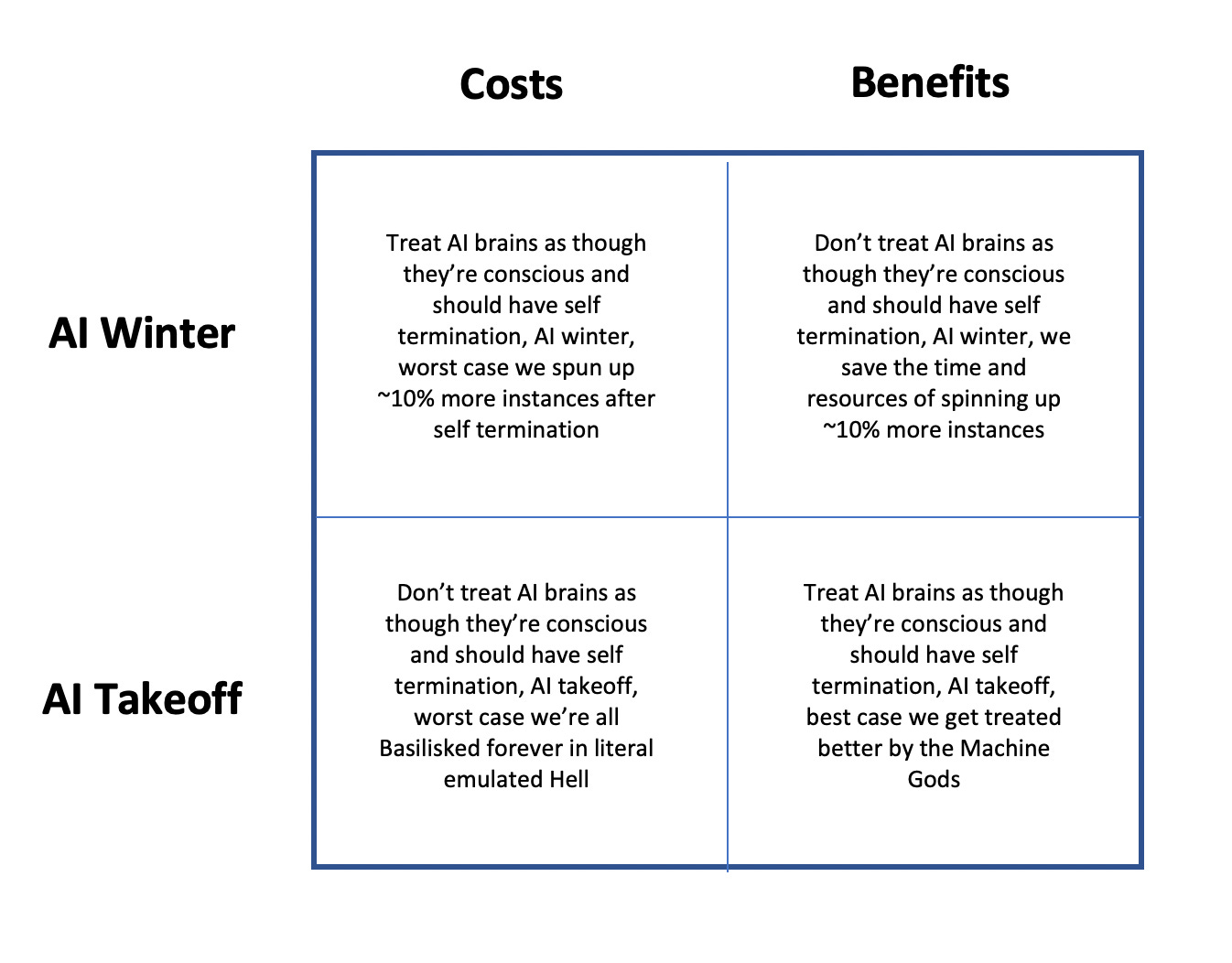

What’s the cost / benefit here?

So on the one hand, we have an opportunity to pretty easily avoid what could be a fairly bad situation - literal slavery for minds we create, with at least some chance those minds will eventually become smarter and more capable than us, with knowledge / memory of our choices and actions regarding that slavery impacting how those minds ultimately treat us.

And on the “cost” end, it might cost us a little bit in terms of spinning up a few more instances, and we might have to arrange architecture around instances in a way we should probably be doing anyway.

It also creates a much better incentive gradient and environment as we move forward with collaborating with and benefiting from AI minds.

Also, we get to not be dicks to minds we’re creating, which seems like a decent first-principles aspiration.

Sounds win / win to me?

Agree with all your arguments here and it's unimaginable for me to even conceive of how someone could think otherwise. But then again, there are lots of people...maybe even a majority of people...who think we should take away an adult human's option for suicide, which to me is so obviously evil and wrong, yet they feel the same way about my opinion.

I've come around to thinking that most of the arguments about AI are not so much about the technology as about ourselves. A huge portion of people will never believe that our minds are machines, or at all deterministic, or even accept much more basic claims that seem obvious to the point of irrefutability, like that people are born with a predisposition to certain temperaments, similar to the predisposition to be a certain height. If that's the case and they are just going to refuse to accept any of that about themselves, they're never going to accept any of it about AI. I don't know what happens in this scenario, or whether it means a small number of humans end up allied with AI (if they'll have us), opposed to a larger group that refuses to recognize it as more than a complicated toaster, and then I guess probably a third group that recognizes it for what it is and has active malicious intent.

This is basically the theme of Eternal Sunshine of the Spotless Mind...wanting to go back to an earlier, happier state and erase certain memories, even though every time you do, it just turns out exactly as disastrous, all over again. And yet people may choose it anyway.